9 Best Ways to Detect Bots on Your Website, Apps, and APIs

38% of the fraudulent activity recorded in 2020 was attributed to automated bots and bot fraud. With nearly half of all activity on the Internet attributed to bots, it is challenging for businesses to protect themselves against fraud.

Not all bot activity is harmful; you need bots on your website for various functions. But it’s important to distinguish between good and bad bots and protect your digital presence from the bad ones.

Below are some preventive and protective measures you can deploy to detect bots on your website, apps, and APIs.

9 best ways to Detect Bots on your website, apps, & APIs

While good bots perform routine functional tasks and help you collect data, bad bots aim to disrupt the speed and flow of operations on your website, steal confidential data, and mislead your analytics.

A data breach in 2020 impacted 8,000 small business owners, causing hefty monetary damage. Detecting bots and identifying good and bad ones is the first step in preventing severe security threats.

Identifying bad bots and protecting against them keeps your website, apps, and APIs functioning smoothly. It helps reduce your IT costs, enhances the UX for your customers, and, most importantly, helps you stay compliant with data protection frameworks such as GDPR.

Let’s look at some methods to help you identify harmful bots and protect your website.

Observe your website activity.

One of the most common ways to identify bot activity is to take a simple look at the current activity of your websites, apps, and APIs. Bot activity will manifest as abnormal behaviour on your site that may look harmless at first glance.

DataDome has an excellent guide on identifying and protecting against harmful bots that dives into this particular topic. Here are some abnormal behaviours on websites that are usually associated with bot activity:

Increase in the number of views on a page

One of your products or blog posts may have gone viral and received many views quickly. However, if a page gets unexpected attention without any marketing push, it is probably bot activity.

The goal of such traffic is often to overwhelm your servers. You will see it as a spike in page views in your analytics.
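As a rough illustration of spotting such a spike, the sketch below compares each day's page views with the average of the preceding week. The function name, the 7-day window, and the threshold of three standard deviations are all assumptions chosen for the example, not settings from any particular analytics tool.

```python
from statistics import mean, stdev

def find_view_spikes(daily_views, window=7, threshold=3.0):
    """Return indexes of days whose views far exceed the trailing average.

    `window` and `threshold` are illustrative defaults, not recommendations.
    """
    spikes = []
    for i in range(window, len(daily_views)):
        history = daily_views[i - window:i]
        avg = mean(history)
        # Use a floor of 1.0 so perfectly flat history does not flag tiny changes.
        spread = max(stdev(history), 1.0)
        if daily_views[i] > avg + threshold * spread:
            spikes.append(i)
    return spikes

# Hypothetical daily page views for a single page.
views = [120, 130, 125, 118, 122, 128, 131, 900, 127]
print(find_view_spikes(views))  # [7]
```

A flagged day is only a prompt to investigate: a genuine viral post or a campaign you forgot about would trigger the same check.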

Session durations will vary wildly.

If a human user decides to leave your website, their session will still last at least a few seconds. This is simply because of the way humans process information and act on impulses.

If they’re intrigued by your content and stay on your website, the session will typically last a few minutes at most. They may stay longer, but then they will be idle with minimal activity.

So, if you see session durations shorter than a few seconds (for example, a few milliseconds) or longer than a few minutes of continuous activity, it can be bot activity.
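A minimal sketch of this check might label each session by its duration. The cutoffs below (one second and thirty minutes) are hypothetical and would need tuning against your own traffic.

```python
def classify_session(duration_seconds, min_human=1.0, max_human=30 * 60):
    """Label a session by duration using assumed, illustrative cutoffs."""
    if duration_seconds < min_human:
        return "too_short"   # faster than a person could plausibly react
    if duration_seconds > max_human:
        return "too_long"    # unusually long run of activity
    return "plausible"

# Hypothetical session durations in seconds.
for duration in (0.02, 45, 7200):
    print(duration, classify_session(duration))
```

Duration alone is a weak signal, so a label like `too_long` is best combined with other indicators before any action is taken.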

High bounce rates

We talked about bots ending sessions within milliseconds in the previous point. When hordes of bots end their sessions in milliseconds, they contribute to an abnormally high bounce rate on a website.

This is because bots work far faster than humans. They accomplish their task within milliseconds and leave the website after causing harm. This is why sessions lasting only milliseconds are a telltale sign of bot activity.
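To make the idea concrete, the following sketch computes the overall bounce rate and the share of sessions that bounced in under a second. The session record layout (`pages`, `duration_ms`) and the one-second cutoff are assumptions for illustration only.

```python
def bounce_rate(sessions):
    """Share of sessions that viewed exactly one page."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s["pages"] == 1)
    return bounces / len(sessions)

def instant_bounce_share(sessions, cutoff_ms=1000):
    """Share of sessions that bounced faster than `cutoff_ms` (assumed cutoff)."""
    if not sessions:
        return 0.0
    instant = sum(
        1 for s in sessions
        if s["pages"] == 1 and s["duration_ms"] < cutoff_ms
    )
    return instant / len(sessions)

# Hypothetical session records.
sessions = [
    {"pages": 1, "duration_ms": 40},
    {"pages": 1, "duration_ms": 35},
    {"pages": 4, "duration_ms": 95000},
    {"pages": 1, "duration_ms": 12000},
]
print(bounce_rate(sessions))           # 0.75
print(instant_bounce_share(sessions))  # 0.5
```

Separating near-instant bounces from ordinary ones helps distinguish automated visits from people who simply did not find what they wanted.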

Activity from unknown locations

Receiving an influx of activity from locations your business has never operated in is fishy and could be because of bots. For example, if your business is established in New York City and you suddenly receive high activity on your website from Sweden, it’s a cause for concern.

The idea behind this is, once again, to overwhelm your servers with activity that amounts to nothing. You may end up chasing those requests, wasting your time and resources.
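One simple way to surface this, sketched below, is to compare the countries in current traffic with those seen during a normal baseline period. It assumes you already have a country code per request (for example from a GeoIP lookup, which is not shown); the function name and the 5% share threshold are hypothetical.

```python
from collections import Counter

def unusual_countries(baseline_countries, current_countries, min_share=0.05):
    """Return countries absent from the baseline that now make up a
    noticeable share (`min_share`, an assumed threshold) of traffic."""
    known = set(baseline_countries)
    counts = Counter(current_countries)
    total = sum(counts.values())
    if total == 0:
        return []
    return sorted(
        country for country, hits in counts.items()
        if country not in known and hits / total >= min_share
    )

# Hypothetical per-request country codes.
baseline = ["US"] * 95 + ["CA"] * 5
current = ["US"] * 60 + ["SE"] * 40
print(unusual_countries(baseline, current))  # ['SE']
```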

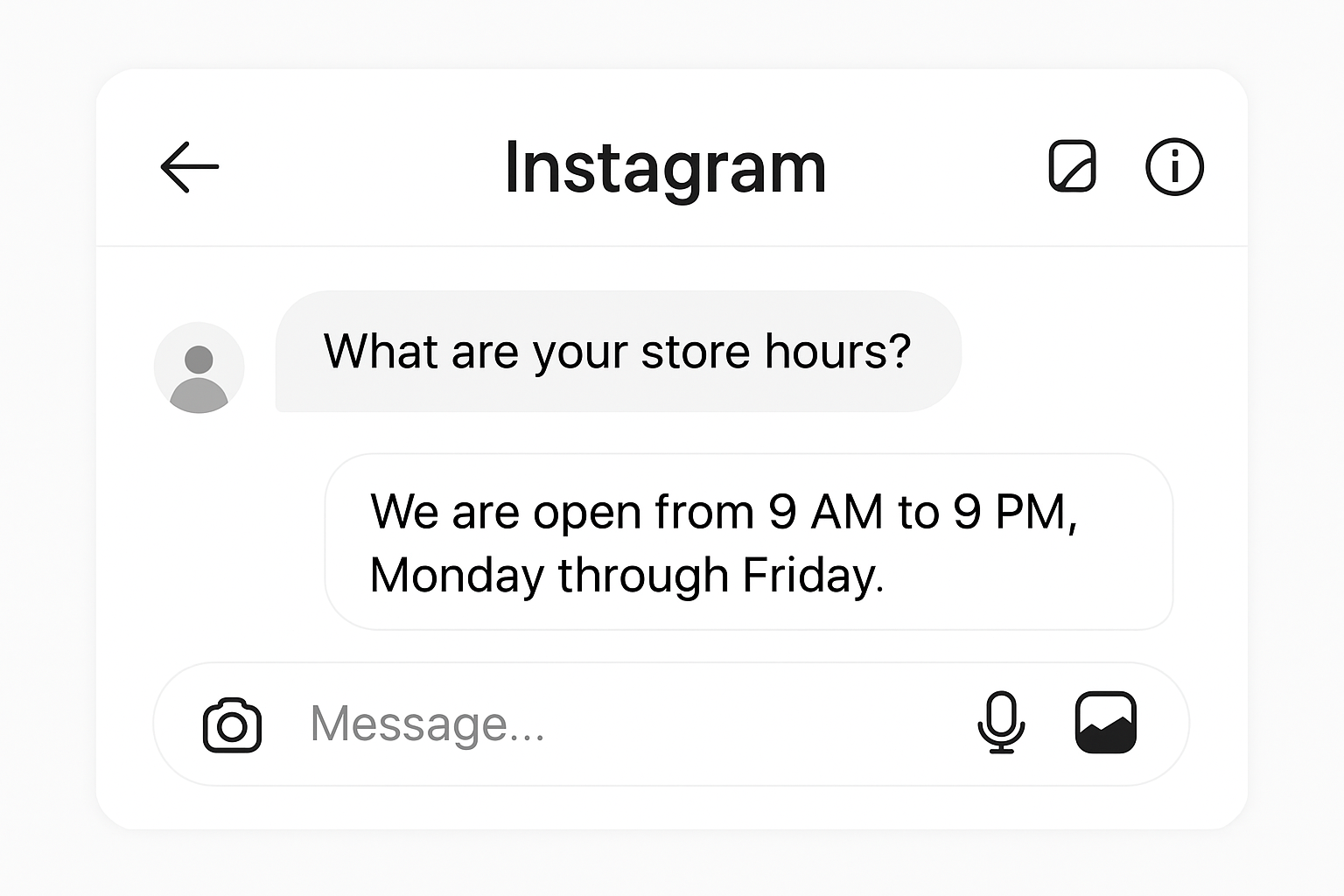

Deploy CAPTCHA challenges

CAPTCHA stands for ‘Completely Automated Public Turing test to tell Computers and Humans Apart’. It’s a challenge-response test used to differentiate between human and bot users. CAPTCHAs can be text-based, sound-based, or image-based.

The test aims to distinguish humans from bots by presenting a challenge that is difficult for bots to solve and relatively easy for humans.

Bots are increasingly adept at overcoming CAPTCHA challenges, but CAPTCHAs are still a common form of protection that companies use.

Monitor user behavior

Bots try to emulate human behaviour to blend in and escape identification. However, they are fairly easy to distinguish from human users simply because they are so fast.

This is why even when they perform activities akin to human behaviour, such as submitting a request, they do it so quickly that they stand out.

So, monitor user activity and behaviour to see which users act unusually fast and repeat a fixed pattern of activities. This will help you single them out and follow them.
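A minimal sketch of this kind of monitoring looks at the gaps between one client's requests and flags clients that are either very fast or suspiciously regular. The thresholds (`min_gap`, `max_jitter`) are assumptions for illustration, and the timestamps are hypothetical.

```python
from statistics import mean, pstdev

def looks_automated(timestamps, min_gap=0.5, max_jitter=0.05):
    """Flag a client whose requests are too fast or too evenly spaced.

    `timestamps` are request times in seconds, in ascending order.
    """
    if len(timestamps) < 3:
        return False  # not enough data to judge
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    average_gap = mean(gaps)
    too_fast = average_gap < min_gap
    # Humans rarely act at perfectly even intervals.
    too_regular = pstdev(gaps) < max_jitter * average_gap
    return too_fast or too_regular

print(looks_automated([0.0, 0.1, 0.2, 0.3, 0.4]))    # True: far too fast
print(looks_automated([0, 2, 4, 6, 8]))              # True: perfectly regular
print(looks_automated([0.0, 3.2, 9.5, 11.0, 25.4]))  # False
```

More sophisticated bots add random delays, so timing checks like this work best alongside the other signals in this article.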

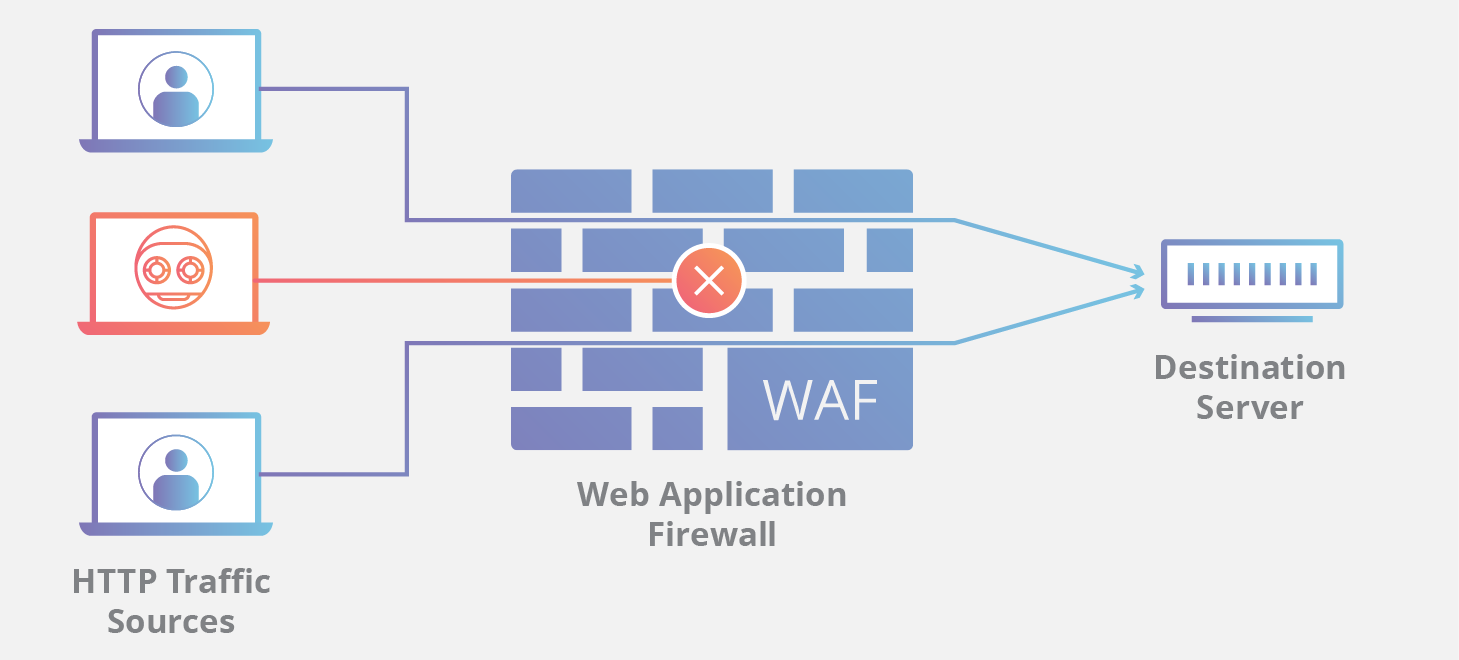

Use Web Application Firewalls (WAFs)

Web Application Firewalls (WAFs) apply rules to separate legitimate traffic from bad bot traffic. They prevent known attacks such as SQL injection and cross-site scripting. The firewall scans the traffic for familiar signatures linked to known attacks.

This is why signature-based WAFs can only block familiar threats. New threats whose patterns have not yet been discovered and documented can bypass them. Even if the activity is suspicious, the firewall may record it as a human user fumbling.
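The signature-matching idea can be illustrated with a toy example. The two regular expressions below are deliberately simplistic stand-ins written for this sketch; real WAF rule sets are far larger and more careful, and nothing here reflects any specific product.

```python
import re
from urllib.parse import unquote_plus

# Toy signatures for illustration only; real rule sets are much more thorough.
SIGNATURES = {
    "sql_injection": re.compile(
        r"(\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1)", re.IGNORECASE
    ),
    "xss": re.compile(r"(<\s*script\b|javascript:|\bonerror\s*=)", re.IGNORECASE),
}

def match_signatures(raw_query):
    """Return the names of known signatures found in a URL query string."""
    decoded = unquote_plus(raw_query)  # undo URL encoding before matching
    return [name for name, pattern in SIGNATURES.items() if pattern.search(decoded)]

print(match_signatures("id=1%20OR%201=1"))                      # ['sql_injection']
print(match_signatures("q=%3Cscript%3Ealert(1)%3C/script%3E"))  # ['xss']
print(match_signatures("q=blue+shoes"))                         # []
```

The last call shows the limitation described above: anything that does not match a documented pattern passes straight through.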

Check User agents

A user agent is a computer program that acts on behalf of a person. In web surfing, the browser is the user agent for the human using it. The user agent string it sends provides information about the browser and the type of device used to access your website.

Check the user agent information for all the users that visit your website, app, or API. Bots tend to use user agents that are not typically associated with current web browsers or devices. For example, they may report a long-outdated browser version or the name of a scripting tool.

Human users usually run reasonably up-to-date versions of browsers or applications on their devices. Checking the user agent information of everyone who uses your website can therefore help you tell human and bot users apart.
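A basic version of this check could look like the sketch below. The list of tool names and the minimum Chrome version are assumptions picked for the example, and the list is far from complete. Keep in mind that the user agent string is supplied by the client and is easy to fake, so treat it as one signal among several.

```python
import re

# Substrings commonly sent by scripting tools; an illustrative, incomplete list.
AUTOMATION_TOKENS = (
    "curl/", "wget/", "python-requests", "scrapy",
    "go-http-client", "headlesschrome",
)
MIN_CHROME_MAJOR = 100  # hypothetical cutoff for "outdated"

def user_agent_flags(user_agent):
    """Return a list of reasons a User-Agent header looks suspicious."""
    value = (user_agent or "").strip()
    if not value:
        return ["missing"]
    flags = []
    lowered = value.lower()
    if any(token in lowered for token in AUTOMATION_TOKENS):
        flags.append("automation_tool")
    match = re.search(r"Chrome/(\d+)", value)
    if match and int(match.group(1)) < MIN_CHROME_MAJOR:
        flags.append("outdated_browser")
    return flags

print(user_agent_flags("python-requests/2.31.0"))  # ['automation_tool']
print(user_agent_flags(
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/49.0.2623.112 Safari/537.36"
))  # ['outdated_browser']
```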

Add Multi-Factor Authentication (MFA)

Multi-factor authentication (MFA) is a process that helps you secure a user’s account on your web portals. If you allow user accounts on your websites and applications, ask them to opt into MFA. It protects users from credential-stuffing attacks and hacking of their accounts.

The limitation of this option is that many users don’t bother to opt into MFA. They may feel you are putting the onus on them to protect themselves instead of doing something on your end.

You can still make it work by making it a requirement to opt into MFA whenever a user creates an account. Then, it doesn’t stick out as an added responsibility but as a part of the process while making an account.
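One common second factor is a time-based one-time password (TOTP), the kind of six-digit code an authenticator app generates. The sketch below follows the TOTP scheme from RFC 6238 using only the Python standard library. It is meant to show the mechanics, not to serve as production code; the function names are this example's own, and a real deployment would rely on a maintained library plus secure secret storage.

```python
import hashlib
import hmac
import struct
import time

def totp(secret, at_time=None, step=30, digits=6):
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    number = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(number % 10 ** digits).zfill(digits)

def verify_totp(secret, submitted, at_time=None, step=30):
    """Accept the code for the current step or one step either side."""
    now = time.time() if at_time is None else at_time
    return any(
        hmac.compare_digest(
            totp(secret, now + drift * step, step).encode(), submitted.encode()
        )
        for drift in (-1, 0, 1)
    )

# Shared secret from the RFC 6238 test vectors.
secret = b"12345678901234567890"
print(totp(secret, at_time=59, digits=8))  # 94287082
```

Because the code changes every 30 seconds and depends on a secret the bot does not hold, a stolen password alone is not enough to log in.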

Invest in software solutions.

One of the most prominent ways to detect and protect against bots is to invest in software solutions built to detect and mitigate them. They protect your website, applications, and APIs from various attacks.

Moreover, they constantly collect real-time data to adapt and fight against bots that harness newer technologies to escape detection. Software solutions are faster, more agile, and more efficient than individual methods.

Implement rate limiting

Rate limiting refers to capping the number of requests you accept for a feature, or from a single user or IP address, within a given period. This way, bots cannot send requests in hordes and overwhelm your servers.

This technique doesn’t disrupt human users’ activity as they will likely not make requests in large numbers anyway.
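There are several ways to implement rate limiting; the sketch below uses a sliding window per client, kept in memory. The class name and the limits in the usage example are assumptions for illustration, and a real deployment would usually keep the counters in a shared store so that every server sees the same totals.

```python
import time
from collections import defaultdict, deque

class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> recent request times

    def allow(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Drop requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject or challenge the request
        hits.append(now)
        return True

# Hypothetical limit: 3 requests per 10 seconds for each IP address.
limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)
print([limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3)])
# [True, True, True, False]
```

Requests that are refused would typically receive an HTTP 429 (Too Many Requests) response.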

Use honeypots

As the name suggests, honeypots are decoy pages meant to attract bots. This method lets the bot into your website and lets it operate as intended. However, it feeds the bot false or fake information.

Alternatively, you may redirect the bot to another page similar to your webpage but entirely fake. You can perform identification tests on the bot on the decoy page and take measures to remove it without it getting its hands on any valuable information from you.
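Two simple forms of honeypot are a decoy URL that no human visitor would ever be shown, and a form field hidden from humans with CSS that only an automated form-filler would complete. The sketch below checks for both; the paths and the field name are hypothetical, and the hidden-field variant is an addition to the decoy-page idea described above.

```python
# Hypothetical decoy URLs: never linked visibly, so only crawlers find them.
DECOY_PATHS = {"/admin-backup/", "/private/export.csv"}

# Hypothetical form field hidden from humans with CSS; real users leave it empty.
HONEYPOT_FIELD = "website"

def is_honeypot_hit(path, form_data=None):
    """Return True if a request touched a decoy page or filled the hidden field."""
    if path in DECOY_PATHS:
        return True
    form_data = form_data or {}
    return bool((form_data.get(HONEYPOT_FIELD) or "").strip())

print(is_honeypot_hit("/private/export.csv"))                          # True
print(is_honeypot_hit("/contact", {"email": "a@example.com"}))         # False
print(is_honeypot_hit("/contact", {"website": "http://spam.example"})) # True
```

A hit can then be used to tag the client for closer monitoring or to serve it the fake content.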

Identify bots accurately and filter them out to protect your digital spaces from them.

Bot activity is part and parcel of operating online. While you need good bots to perform important routine tasks, you must also weed out bad bots before they harm your website and customers.

Observe the behaviour of users on your website to distinguish human users, good bots, and bad bots. You may secure your user accounts immediately or set up honeypots to lure and capture bots.

CAPTCHA tests and agile software solutions have emerged as standard safety measures.

Let us know in the comments what you think is the best way to identify and eliminate bad bots.